In today’s data-driven world, organizations collect information from a multitude of sources, including databases, applications, sensors, and social media. This raw data is often siloed, inconsistent, and unsuitable for direct analysis.

If all these tasks are only one-time things, we can deal with them with web user interface, which is a user-friendly and more intuitive approach. Unfortunately, it is NOT. Data quality and management are continuous tasks for data professionals like engineers and architects.

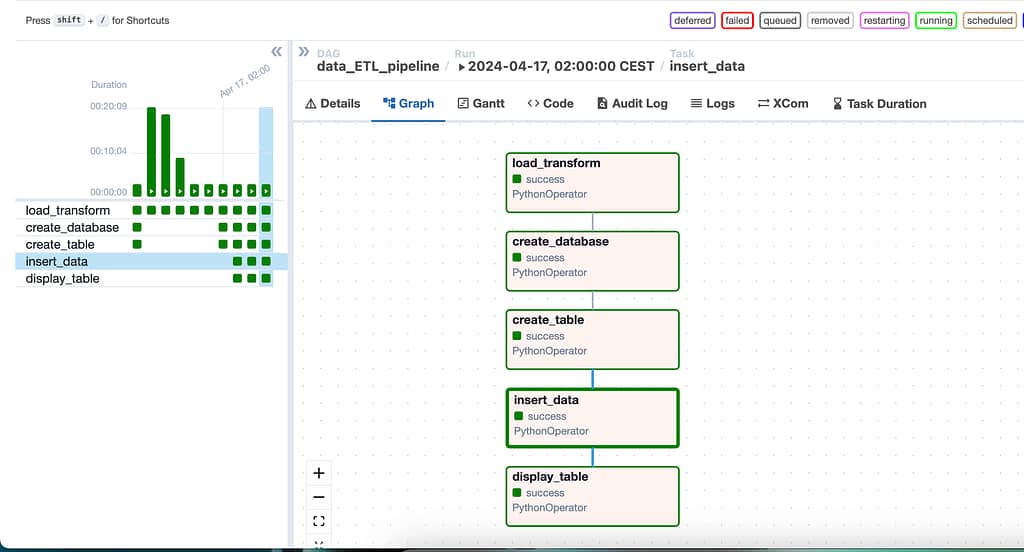

That’s why we embrace the data pipelines. With pipeline, we can automate the process of extracting data from various sources, transforming it into a consistent format, and loading it into a target system (data warehouse, data lake, etc.) for analysis. This integration creates a unified view of your data, enabling comprehensive insights.

Introduction

This pipeline automates the process of preparing and loading data for analysis. It runs daily using cron.

1. Data Extraction and Transformation

- The pipeline starts by loading data from a local CSV file.

- Then it cleans and transforms the data:

- Missing values are removed.

- A new “sales” column is created (UnitPrice * Quantity).

- The “InvoiceDate” column is converted to a datetime format for easier analysis.

The raw data looks like this:

InvoiceNo,StockCode,Description,Quantity,InvoiceDate,UnitPrice,CustomerID,Country

536365,85123A,WHITE HANGING HEART T-LIGHT HOLDER,6,12/1/10 8:26,2.55,17850,United Kingdom

536365,71053,WHITE METAL LANTERN,6,12/1/10 8:26,3.39,17850,United Kingdom

536365,84406B,CREAM CUPID HEARTS COAT HANGER,8,12/1/10 8:26,2.75,17850,United Kingdom

536365,84029G,KNITTED UNION FLAG HOT WATER BOTTLE,6,12/1/10 8:26,3.39,17850,United Kingdom

536365,84029E,RED WOOLLY HOTTIE WHITE HEART.,6,12/1/10 8:26,3.39,17850,United Kingdom2. Data Storage

- A new SQLite3 database is created.

- A table called “online_retail” is created within the database to store the transformed data.

3. Data Loading

- The transformed data is inserted into the newly created table(“online_retail”).

4. Verification

- Finally, the pipeline verifies that all tasks completed successfully.

- It displays the first 20 records of the table for a quick overview of the loaded data.

Tech Stack

- Python

- Airflow

- Sqlite3