What is Airflow?

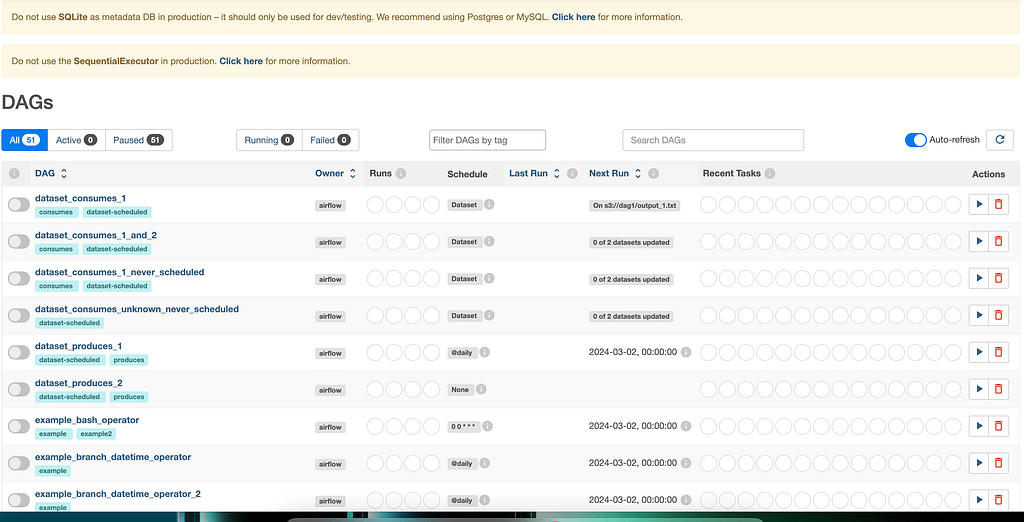

Apache Airflow™ is an open-source platform for developing, scheduling, and monitoring batch-oriented workflows.

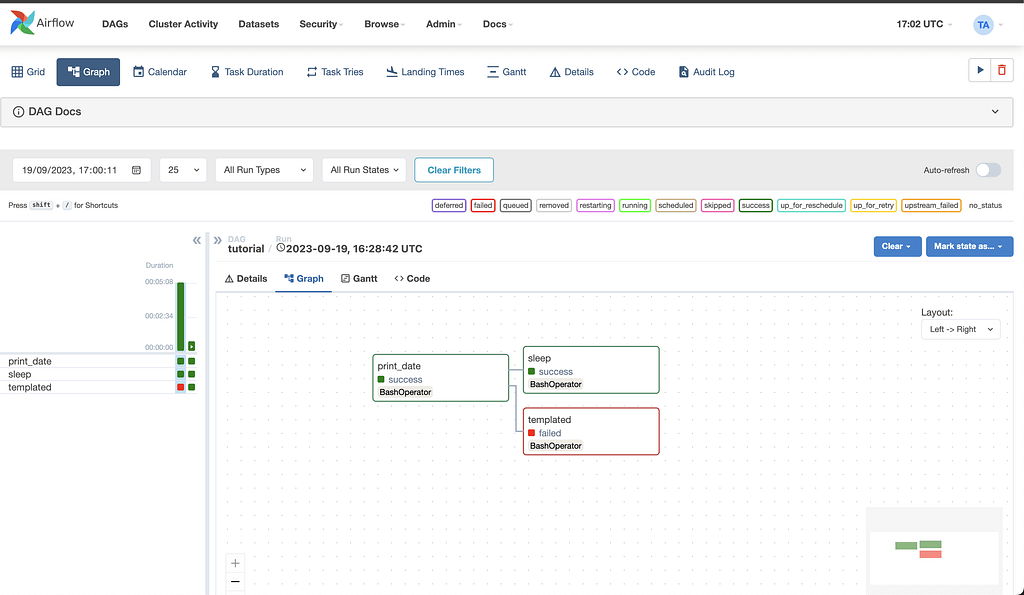

It allows you to define a directed acyclic graph (DAG) of tasks, where each task represents a unit of work, and the dependencies between tasks define the order in which they should be executed. Airflow is commonly used for orchestrating complex data workflows, managing ETL (Extract, Transform, Load) processes, and automating various data-related tasks.

Workflows as code

```python
from datetime import datetime

from airflow import DAG
from airflow.decorators import task
from airflow.operators.bash import BashOperator

# A DAG represents a workflow, a collection of tasks
with DAG(dag_id="demo", start_date=datetime(2024, 1, 1), schedule="0 0 * * *") as dag:
    # Tasks are represented as operators
    hello = BashOperator(task_id="hello", bash_command="echo hello")

    @task()
    def airflow():
        print("airflow")

    # Set dependencies between tasks
    hello >> airflow()
```

The main characteristic of Airflow workflows is that all workflows are defined in Python code. “Workflows as code” serves several purposes:

- Dynamic: Airflow pipelines are configured as Python code, allowing for dynamic pipeline generation.

- Extensible: The Airflow™ framework contains operators to connect with numerous technologies. All Airflow components are extensible to easily adjust to your environment.

- Flexible: Workflow parameterization is built in, using the Jinja templating engine.
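To make the "Dynamic" point concrete, here is a minimal plain-Python sketch of generating a pipeline from a list instead of writing each task by hand. It deliberately imports nothing from Airflow: `build_pipeline`, the table names, and the `extract_`/`load_`/`report` task names are all hypothetical, and the result is just a dictionary of task ids and their upstream tasks. In a real DAG file, the same loop would typically create operators rather than dictionary entries.

```python
# Plain-Python analogy of dynamic pipeline generation.
# No Airflow imports; every name here is hypothetical.
def build_pipeline(tables):
    """Return {task_id: set of upstream task_ids} for the given table names."""
    upstream = {}
    for table in tables:
        # One extract task and one load task per table.
        upstream[f"extract_{table}"] = set()
        upstream[f"load_{table}"] = {f"extract_{table}"}
    # A final task that waits for every load task to finish.
    upstream["report"] = {f"load_{table}" for table in tables}
    return upstream


pipeline = build_pipeline(["orders", "users"])
```

Adding a third table to the list adds two more tasks and one more dependency for `report`, with no other change to the code.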

What is Airflow for?

Airflow™ is a batch workflow orchestration platform. The Airflow framework contains operators to connect with many technologies and is easily extensible to connect with a new technology. If your workflows have a clear start and end, and run at regular intervals, they can be programmed as an Airflow DAG.

Because workflows are defined as Python code:

- Workflows can be stored in version control so that you can roll back to previous versions

- Workflows can be developed by multiple people simultaneously

- Tests can be written to validate functionality

- Components are extensible and you can build on a wide collection of existing components

Rich scheduling and execution semantics enable you to easily define complex pipelines that run at regular intervals. Backfilling allows you to (re-)run pipelines on historical data after making changes to your logic, and the ability to rerun partial pipelines after resolving an error helps maximize efficiency.
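Conceptually, backfilling a daily pipeline means running it once for each past interval. The sketch below only illustrates that idea with the standard library; it is not Airflow's scheduling logic, which is richer than this, and the function name `daily_intervals` is hypothetical.

```python
from datetime import datetime, timedelta


def daily_intervals(start, end):
    """Yield (interval_start, interval_end) pairs covering [start, end) one day at a time."""
    current = start
    while current < end:
        yield current, current + timedelta(days=1)
        current += timedelta(days=1)


# Three historical days that a backfill would need to (re-)process.
intervals = list(daily_intervals(datetime(2024, 1, 1), datetime(2024, 1, 4)))
for interval_start, interval_end in intervals:
    print(f"run for {interval_start:%Y-%m-%d} -> {interval_end:%Y-%m-%d}")
```

Each pair corresponds to one pipeline run over one day of historical data.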

Key features of Apache Airflow

- DAGs (Directed Acyclic Graphs): Workflows in Airflow are defined as directed acyclic graphs, where nodes represent tasks and edges represent dependencies between tasks.

- Task Dependencies: Airflow allows you to define dependencies between tasks, ensuring that tasks are executed in the correct order based on their dependencies.

- Dynamic Workflow Generation: Workflows in Airflow are defined as code, allowing for dynamic generation and modification of workflows based on parameters or conditions.

- Extensibility: Airflow has a modular architecture, and users can extend its functionality by creating custom operators, sensors, hooks, and executors.

- Scheduler: Airflow comes with a scheduler that can be used to trigger workflows based on a specified schedule (e.g., hourly, daily) or external events.

- Logging and Monitoring: Airflow provides a web-based user interface for monitoring the progress of workflows, inspecting logs, and viewing historical run information.

- Integration with External Systems: Airflow can easily integrate with various external systems and services, making it versatile for different use cases.

- Parallel Execution: Airflow supports parallel execution of tasks that do not depend on one another, allowing for efficient utilization of resources.
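The DAG, task dependency, and parallel execution points above can be illustrated without Airflow, using Python's standard `graphlib` module (Python 3.9+). This is a sketch of the underlying idea, not how Airflow's scheduler is implemented; the task names and the `execution_waves` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks; each key maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_users": set(),
    "transform": {"extract_orders", "extract_users"},
    "load": {"transform"},
}


def execution_waves(deps):
    """Group tasks into waves; every task in a wave has all its dependencies met."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks that could run in parallel
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished so downstream tasks unlock
    return waves


waves = execution_waves(dependencies)
for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {wave}")
```

The two extract tasks land in the same wave because neither depends on the other, while `transform` and `load` each wait for the wave before them. The acyclic requirement matters here: a cycle would leave no valid order in which to run the tasks.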